Archive

Book presentation at the USC Python Users Group

Have a child, plant a tree, write a book

Or more importantly: rear your children to become nice people, water those trees, and make sure that your books make a good impact.

I recently enjoyed the rare pleasure of having a child (my first!) and publishing a book almost at the same time. Since this post belongs in my professional blog, I will exclusively comment on the latter: Learning SciPy for Numerical and Scientific Computing, published by Packt in a series of technical books focusing on Open Source software.

Keep in mind that the book is for a very specialized audience: you need not only a basic knowledge of Python, but also a somewhat advanced command of mathematics and physics, and an interest in engineering or scientific applications. This is an excerpt from the detailed description of the monograph, as it reads on the publisher’s page:

It is essential to incorporate workflow data and code from various sources in order to create fast and effective algorithms to solve complex problems in science and engineering. Data is coming at us faster, dirtier, and at an ever increasing rate. There is no need to employ difficult-to-maintain code, or expensive mathematical engines to solve your numerical computations anymore. SciPy guarantees fast, accurate, and easy-to-code solutions to your numerical and scientific computing applications.

Learning SciPy for Numerical and Scientific Computing unveils secrets to some of the most critical mathematical and scientific computing problems and will play an instrumental role in supporting your research. The book will teach you how to quickly and efficiently use different modules and routines from the SciPy library to cover the vast scope of numerical mathematics with its simplistic practical approach that is easy to follow.

The book starts with a brief description of the SciPy libraries, showing practical demonstrations for acquiring and installing them on your system. This is followed by the second chapter which is a fun and fast-paced primer to array creation, manipulation, and problem-solving based on these techniques.

The rest of the chapters describe the use of all different modules and routines from the SciPy libraries, through the scope of different branches of numerical mathematics. Each big field is represented: numerical analysis, linear algebra, statistics, signal processing, and computational geometry. And for each of these fields all possibilities are illustrated with clear syntax, and plenty of examples. The book then presents combinations of all these techniques to the solution of research problems in real-life scenarios for different sciences or engineering — from image compression, biological classification of species, control theory, design of wings, to structural analysis of oxides.

The book is also sold online on Amazon, where it has received pretty good reviews. I have found other reviews elsewhere, with similarly welcoming comments:

- Artificial Intelligence in Motion by Marcel Caraciolo

- The Endeavour, by John D. Cook

Which one is the fake?

| “Crab on its back” | “Willows at sunset” | “Still life: Potatoes in a yellow dish” |

Buy my book!

Well, OK, it is not technically my book, but I am one of the authors of one of the chapters. And no, as far as I know, I don’t get a dime from the sales, in royalties or anything else.

As the title suggests (Modeling Nanoscale Imaging in Electron Microscopy), this book presents some recent advances that have been made using mathematical methods to resolve problems in electron microscopy. With improvements in hardware-based aberration software significantly expanding the nanoscale imaging capabilities of scanning transmission electron microscopes (STEM), these mathematical models can replace some labor intensive procedures used to operate and maintain STEMs. This book, the first in its field since 1998, covers relevant concepts such as super-resolution techniques (that’s my contribution!), special de-noising methods, application of mathematical/statistical learning theory, and compressed sensing.

We even got a nice review in Physics Today by Les Allen, no less!

Imaging with electrons, in particular scanning transmission electron microscopy (STEM), is now in widespread use in the physical and biological sciences. And its importance will only grow as nanotechnology and nano-Biology continue to flourish. Many applications of electron microscopy are testing the limits of current imaging capabilities and highlight the need for further technological improvements. For example, high throughput in the combinatorial chemical synthesis of catalysts demands automated imaging. The handling of noisy data also calls for new approaches, particularly because low electron doses are used for sensitive samples such as biological and organic specimens.

Modeling Nanoscale Imaging in Electron Microscopy addresses all those issues and more. Edited by Thomas Vogt and Peter Binev at the University of South Carolina (USC) and Wolfgang Dahmen at RWTH Aachen University in Germany, the book came out of a series of workshops organized by the Interdisciplinary Mathematics Institute and the NanoCenter at USC. Those sessions took the unusual but innovative approach of bringing together electron microscopists, engineers, physicists, mathematicians, and even a philosopher to discuss new strategies for image analysis in electron microscopy.

In six chapters, the editors tackle the ambitious challenge of bridging the gap between high-level applied mathematics and experimental electron microscopy. They have met the challenge admirably. I believe that high-resolution electron microscopy is at a point where it will benefit considerably from an influx of new mathematical approaches, daunting as they may seem; in that regard Modeling Nanoscale Imaging in Electron Microscopy is a major step forward. Some sections present a level of mathematical sophistication seldom encountered in the experimentally focused electron-microscopy literature.

The first chapter, by philosopher of science Michael Dickson, looks at the big picture by raising the question of how we perceive nano-structures and suggesting that a Kantian approach would be fruitful. The book then moves into a review of the application of STEM to nanoscale systems, by Nigel Browning, a leading experimentalist in the field, and other well-known experts. Using case studies, the authors show how beam-sensitive samples can be studied with high spatial resolution, provided one controls the beam dose and establishes the experimental parameters that allow for the optimum dose. The third chapter, written by image-processing experts Sarah Haigh and Angus Kirkland, addresses the reconstruction, from atomic-resolution images, of the wave at the exit surface of a specimen. The exit surface wave is a fundamental quantity containing not only amplitude (image) information but also phase information that is often intimately related to the atomic-level structure of the specimen. The next two chapters, by Binev and other experts, are based on work carried out using the experimental and computational resources available at USC. Examples in chapter four address the mathematical foundations of compressed sensing as applied to electron microscopy, and in particular high-angle annular dark-field STEM. That emerging approach uses randomness to extract the essential content from low-information signals. Chapter five eloquently discusses the efficacy of analyzing several low-dose images with specially adapted digital-image-processing techniques that allow one to keep the cumulative electron dose low and still achieve acceptable resolution.

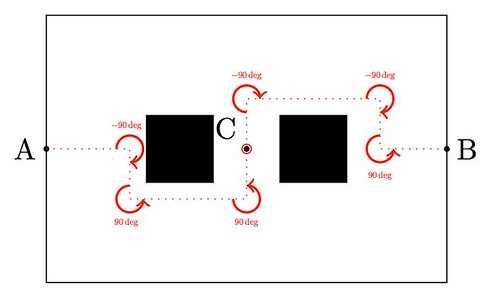

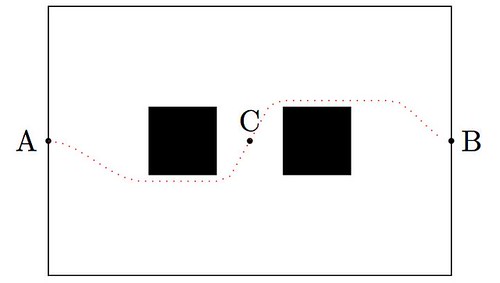

The book concludes with a wide-ranging discussion by mathematicians Amit Singer and Yoel Shkolnisky on the reconstruction of a three-dimensional object via projected data taken at random and initially unknown object orientations. The discussion is an extension of the authors’ globally consistent angular reconstitution approach for recovering the structure of a macromolecule using cryo-electron microscopy. That work is also applicable to the new generation of x-ray free-electron lasers, which have similar prospective applications, and illustrates nicely the importance of applied mathematics in the physical sciences.

Modeling Nanoscale Imaging in Electron Microscopy will be an important resource for graduate students and researchers in the area of high-resolution electron microscopy.

(Les J. Allen, Physics Today, Vol. 65 (5), May, 2012)

| Table of contents | Preface | Sample chapter |

Sometimes Math is not the answer

I would love to stand corrected in this case, though. Let me first explain the reason behind this claim. It will take a minute, so bear with me:

Say there is a new movie released, and you would like to know how good it is, or whether you and your partner will enjoy watching it together. There are plenty of online resources out there that will give you enough information to make an educated opinion but, let’s face it, you will not have the complete picture unless you actually go see the movie (sorry for the pun).

For example, I fell for “The Blair Witch Project”: their amazing advertising campaign promised me thrills and originality. On top of that, the averaged evaluation of many movie critics who had access to previews claimed that this was a flick not to be missed. Heck, I even bought the DVD for my sister before watching it myself! (She and I have similar taste in movies.) The disappointment was, obviously, epic. Before that, and many a time afterwards, I have tripped over the same stone. If nothing else, I learned not to trust commercials and sneak previews any more (“Release the Kraken!”, anyone?)

The only remaining resource should then be the advice of knowledgeable movie critics, provided you trust their integrity, that is. Then it hit me: my taste in movies, so similar to my sister’s, could be completely different from that of the “average critic”. That being the case, why would I trust what a bunch of experts have to say? The mathematician in me took over, and started planning a potential algorithm:

So you want to be an Applied Mathematician

The way of the Applied Mathematician is one full of challenging and interesting problems. We thrive by association with the Pure Mathematician, and at the same time with the no-nonsense, hands-on, hard-core Engineer. But not everything is happy in Applied Mathematician land: every now and then, we are disregarded by other professionals who misjudge either our background or our efficiency at attacking real-life problems.

I once heard a colleague (an algebraist) complain that Applied Mathematicians did nothing but code solutions of partial differential equations in Fortran; his skewed view arose from a naïve observation of a few graduate students working on a project. The truth could not be further from this claim: we do indeed occasionally solve PDEs in Fortran (I give you that) and we are not ashamed to admit it. But before that job can be addressed, we have gone through a great deal of thinking on how best to code this simple problem. And you would not believe the huge amount of deep Mathematics involved in this journey: everything from high-level Linear Algebra, Calculus of Variations, Harmonic Analysis, Differential Geometry, Microlocal Analysis, Functional Analysis, Dynamical Systems, the Theory of Distributions, etc. Not only are we familiar with the basic background in all those fields, but we are also supposed to be able to perform serious research in any of them at any given time.

My soon-to-be-converted algebraist friend challenged me, not without a hint of smugness in his voice, to describe my latest project at the time. It revolved around the idea of frames (think of them as redundant bases, if you please), and required proving a couple of inequalities involving sequences of functions in $L_p$-spaces, which we attacked using a beautiful technique: Bellman functions. About ninety minutes later he conceded defeat in front of the board where the math was displayed. He promptly admitted that this was no Fortran code, and showed a newfound respect and reverence for the trade.

It doesn’t hurt either that the kind of problems that we attack are more likely to attract funding. And collaboration. And to be noticed in the press.

Alright, so some of you are sold already. What is the next step? I am assuming that at this point you own your Calculus, Analysis, Probability and Statistics, Linear Programming, Topology, Geometry, and Physics, and that you are able to solve most known ODEs. From here, as with any other field, my recommendation is to slowly build a Batman belt: acquire and devour a sequence of books and scientific articles, until you are very familiar with their contents. When facing a new problem, you should be able to recall from your Batman belt what technique could work best, in which book(s) you could get some references, and how it has been used in the past for related problems.

Along these lines, I have included below a collection of the absolutely essential books that, in my opinion, every Applied Mathematician should start studying:

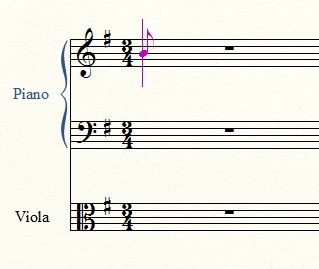

Wavelets in sage

There are no native wavelet packages in Sage. But there is a great Python module that contains, among other things, forward and inverse discrete wavelet transforms (for one and two dimensions). It comes bundled with seventy-six wavelet filters, and lets you build your own! Its name is PyWavelets, originally written by Filip Wasilewski, and it can be retrieved from pypi.python.org/pypi/PyWavelets. In order to install it in Sage, take the following steps:

Voronoi mosaics

While looking for ideas to implement Voronoi diagrams in Sage, I stumbled upon a beautiful paper written by a group of Japanese computer graphics professionals from the universities of Hokkaido and Tokyo: A Method for Creating Mosaic Images Using Voronoi Diagrams. The first step of their algorithm is simple yet brilliant: start with any given image, and superimpose a hexagonal tiling of the plane. By a clever approximation scheme, modify the tiling to become a Voronoi diagram that adaptively minimizes some approximation error. As a consequence, the resulting Voronoi diagram is adapted to the contours of the original image.

| (Fig. 1) | (Fig. 2) | (Fig. 3) | (Fig. 4) |

In a second step, they manually adjust the Voronoi image interactively by moving, adding, or deleting sites. They also take the liberty of adding visual effects by hand: emphasizing the outlines and color variations in each Voronoi region, so they look like actual pieces of stained glass (Fig. 4).
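The first, automatic step can be illustrated with a rough sketch in plain Python. This is not the method from the paper: it only builds the initial hexagonal arrangement of sites, assigns every pixel to its nearest site, and paints each Voronoi cell with its mean gray level. The adaptive scheme that moves the sites to reduce the error is left out, and the names `hex_sites`, `nearest_site` and `voronoi_mosaic` are hypothetical helpers invented for this illustration.

```python
import math


def hex_sites(width, height, spacing):
    """Sites on a hexagonal lattice covering a width x height image."""
    sites = []
    row_step = spacing * math.sqrt(3) / 2  # vertical gap between lattice rows
    row = 0
    while row * row_step < height:
        # Odd rows are shifted by half a cell, which makes the cells hexagons.
        x = spacing / 2 if row % 2 else 0.0
        while x < width:
            sites.append((x, row * row_step))
            x += spacing
        row += 1
    return sites


def nearest_site(x, y, sites):
    """Index of the site closest to pixel (x, y)."""
    return min(range(len(sites)),
               key=lambda k: (sites[k][0] - x) ** 2 + (sites[k][1] - y) ** 2)


def voronoi_mosaic(image, sites):
    """Paint every Voronoi cell with its mean gray level.

    `image` is a list of rows of gray values. Returns the mosaic and the
    sum of squared differences between the mosaic and the input.
    """
    height, width = len(image), len(image[0])
    labels = [[nearest_site(x, y, sites) for x in range(width)]
              for y in range(height)]
    totals = [0.0] * len(sites)
    counts = [0] * len(sites)
    for y in range(height):
        for x in range(width):
            totals[labels[y][x]] += image[y][x]
            counts[labels[y][x]] += 1
    # Cells that contain no pixel keep a mean of 0.0 and are never painted.
    means = [t / c if c else 0.0 for t, c in zip(totals, counts)]
    mosaic = [[means[labels[y][x]] for x in range(width)]
              for y in range(height)]
    error = sum((image[y][x] - mosaic[y][x]) ** 2
                for y in range(height) for x in range(width))
    return mosaic, error
```

Calling `voronoi_mosaic(image, hex_sites(width, height, spacing))` gives the starting mosaic. An adaptive scheme would then perturb the sites and keep the changes that lower the returned error, which is one plausible reading of how the cells end up following the contours of the image.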

Presentation: Curvelets and Approximation Theory

Find below a set of slides that I used for my talk at the IMA in the Thematic Year on Mathematical Imaging. They contain a detailed construction of my generalized curvelets, some results by Donoho and Candès explaining their main properties, and a bunch of applications to Imaging. Click on the slide below to retrieve the pdf file with the presentation.

Poster: Curvelets vs. Wavelets (Mathematical Models of Natural Images)

Professor Bradley J. Lucier and I presented a poster at the Workshop on Natural Images during the thematic year on Mathematical Imaging at the IMA. We experimented with wavelet and curvelet decompositions of 24 high-quality photos from a CD that Kodak® distributed in the late 90s. All the experiment details and results can be read in the file Curvelets/talk.pdf.

The computations concerning curvelet coefficients were carried out in Matlab, with the Curvelab 2.0.1 toolbox developed by Candès, Demanet, Donoho and Ying. The computations concerning wavelet coefficients were performed with Professor Lucier’s own code.

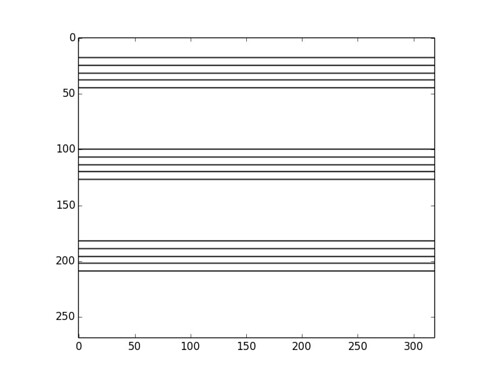

Wavelet Coefficients

To aid my understanding of wavelets, during my first months studying the subject I wrote a couple of scripts: one to compute wavelet coefficients of a given pgm gray-scale image (the decoding script), and one to recover an approximation to the original image from a subset of those coefficients (the coding script). I used OCaml, a multi-paradigm language: imperative, functional and object-oriented.

The decoding script uses the simplest wavelets possible: the Haar functions. As suggested in the article “Fast wavelet techniques for near-optimal image processing”, by R. DeVore and B.J. Lucier, rather than computing the actual raw wavelet coefficients, one computes instead a related integer value (a code). The coding script interprets those integer values and modifies them appropriately to obtain the actual coefficients. The integers are stored using Huffman trees, but I used a very simple one, not designed for speed or optimization in any way.
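To give an idea of what such scripts compute, here is a minimal sketch in Python (not the original OCaml code). It assumes a one-dimensional signal whose length is a power of two instead of an image, works with the raw orthonormal Haar coefficients, and skips both the integer codes and the Huffman storage. The function names are made up for this illustration.

```python
import math


def haar_forward(signal):
    """Orthonormal Haar transform of a list whose length is a power of two.

    Output layout: [overall average, coarsest detail, ..., finest details].
    """
    coeffs = [float(v) for v in signal]
    n = len(coeffs)
    while n > 1:
        half = n // 2
        averages = [(coeffs[2 * i] + coeffs[2 * i + 1]) / math.sqrt(2)
                    for i in range(half)]
        details = [(coeffs[2 * i] - coeffs[2 * i + 1]) / math.sqrt(2)
                   for i in range(half)]
        coeffs[:n] = averages + details
        n = half  # the next pass only touches the averages
    return coeffs


def haar_inverse(coeffs):
    """Undo haar_forward, rebuilding the signal from coarse to fine."""
    signal = list(coeffs)
    n = 1
    while n < len(signal):
        merged = []
        for a, d in zip(signal[:n], signal[n:2 * n]):
            merged.append((a + d) / math.sqrt(2))
            merged.append((a - d) / math.sqrt(2))
        signal[:2 * n] = merged
        n *= 2
    return signal


def keep_largest(coeffs, count):
    """Zero all but the `count` coefficients of largest absolute value."""
    order = sorted(range(len(coeffs)), key=lambda i: abs(coeffs[i]),
                   reverse=True)
    kept = set(order[:count])
    return [c if i in kept else 0.0 for i, c in enumerate(coeffs)]
```

An approximation with `count` coefficients is then `haar_inverse(keep_largest(haar_forward(signal), count))`. For an image, the same idea would be applied along rows and columns.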

Following a paper by A. Chambolle, R. DeVore, N.Y. Lee and B. Lucier, “Non-linear wavelet image processing: Variational problems, compression and noise removal through wavelet shrinkage”, these scripts were later used in two experiments: computation of the smoothness of an image, and removal of Gaussian white noise by the wavelet shrinkage method proposed by Donoho and Johnstone in the early 90s.
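As a rough illustration of wavelet shrinkage, the Python sketch below applies soft thresholding to a list of detail coefficients. The choice of the universal threshold σ√(2 ln n) from Donoho and Johnstone's work is an assumption made here for concreteness; the experiments mentioned above may well have used a different threshold. The noise level `noise_sigma` is taken as known, and all function names are hypothetical.

```python
import math


def soft_threshold(coeff, threshold):
    """Pull a coefficient toward zero by `threshold`; small ones become 0."""
    magnitude = abs(coeff) - threshold
    if magnitude <= 0:
        return 0.0
    return math.copysign(magnitude, coeff)


def universal_threshold(noise_sigma, sample_count):
    """Universal threshold sigma * sqrt(2 ln n) (assumed choice)."""
    return noise_sigma * math.sqrt(2.0 * math.log(sample_count))


def shrink_details(details, noise_sigma, sample_count):
    """Soft-threshold detail coefficients of a signal with sample_count samples.

    Only detail coefficients should be passed in; the coarse average is
    normally left untouched.
    """
    threshold = universal_threshold(noise_sigma, sample_count)
    return [soft_threshold(d, threshold) for d in details]
```

Denoising then amounts to transforming the noisy data, shrinking the detail coefficients, and applying the inverse transform.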

Progressive reconstruction of a grey-scale image with its largest (in absolute value) coefficients.